
Synthetic Intelligence

The long-term implications of machine intelligence for philosophy, science, and design

Yes, you are a stochastic parrot

from The Model is the Message by Blaise Aguera Y Arcas and Benjamin Bratton

Historically, AI and the philosophy of AI have evolved in tight coupling, informing and delimiting one another. The conceptual vocabulary this coupling has provided, such as the Turing Test, is not sufficient to articulate what artificial language is and is not. People see themselves and their society in the reflection AI provides, and are thrilled and horrified by what it portends. But this reflection also prevents people from understanding AI, its potential, and its relationship to human and non-human societies. A new framework is needed to understand the possible implications.

Synthetic Intelligence traces the implications of artificial intelligence, particularly through cognitive infrastructures such as large language models. Antikythera explores the path cleared for the philosophy of synthetic intelligence through the externalization of thought in technical systems. At societal scale, these are cognitive infrastructures, distributed networks that not only relay and process information but are capable of creativity, analysis and reason.

What is reflected back is not necessarily human-like. The view here looks beyond anthropomorphic notions of AI and toward a fundamental concern with machine intelligence as, in the words of Stanisław Lem, an existential technology.

Specific areas of focus include large foundational models, natural and artificial language, models of mind, animal cognition, urban automation, machine sensing, anthropic bias, and the global history of machine intelligence beyond standard Western narratives. The questions posed are drawn from diverse cultures and contexts but also invented in concert with the ongoing evolution of intelligence in all its guises.

The planetary future of AI extends and alters conceptions of both “intelligence” and “artificiality” and what the provenance of both may be.

Antikythera focuses on several emerging areas of Synthetic Intelligence research:

● Limits of anthropocentric models of AI

● Planetary discourse on the future of AI

● Wicked problems in AI Alignment

● HAIID (Human-AI Interaction Design) as a formal discipline

● AI and planetary sensing, science and sapience

● Physicalized AI and automated systems

● Simulations and toy world models

● AI and climate sensing and governance

● Machine learning and scientific epistemology

The emergence of machine intelligence must be steered toward planetary sapience in the service of viable long-term futures. Instead of strong alignment with human values and superficial anthropocentrism, steering AI means treating these humanisms with nuanced suspicion and recognizing AI's broader potential. At stake is not only what AI is, but what a society is, and what AI is for. What should align with what?

What does it mean to ask machine intelligence to “align” to human wishes and self-image? Is this a useful tactic for design, or a dubious metaphysics that obfuscates how intelligence as a whole might evolve? How should we rethink this framework in both theory and practice?

Synthetic intelligence refers to the wider field of artificially composed intelligent systems, both those that correspond to Humanism's traditions and those that do not. These systems can nonetheless complement and combine with human cognition, intuition, creativity, abstraction and discovery. Inevitably, both are forever altered by such diverse amalgamations.

After Alignment: Orienting Synthetic Intelligence Beyond Human Reflection

2023

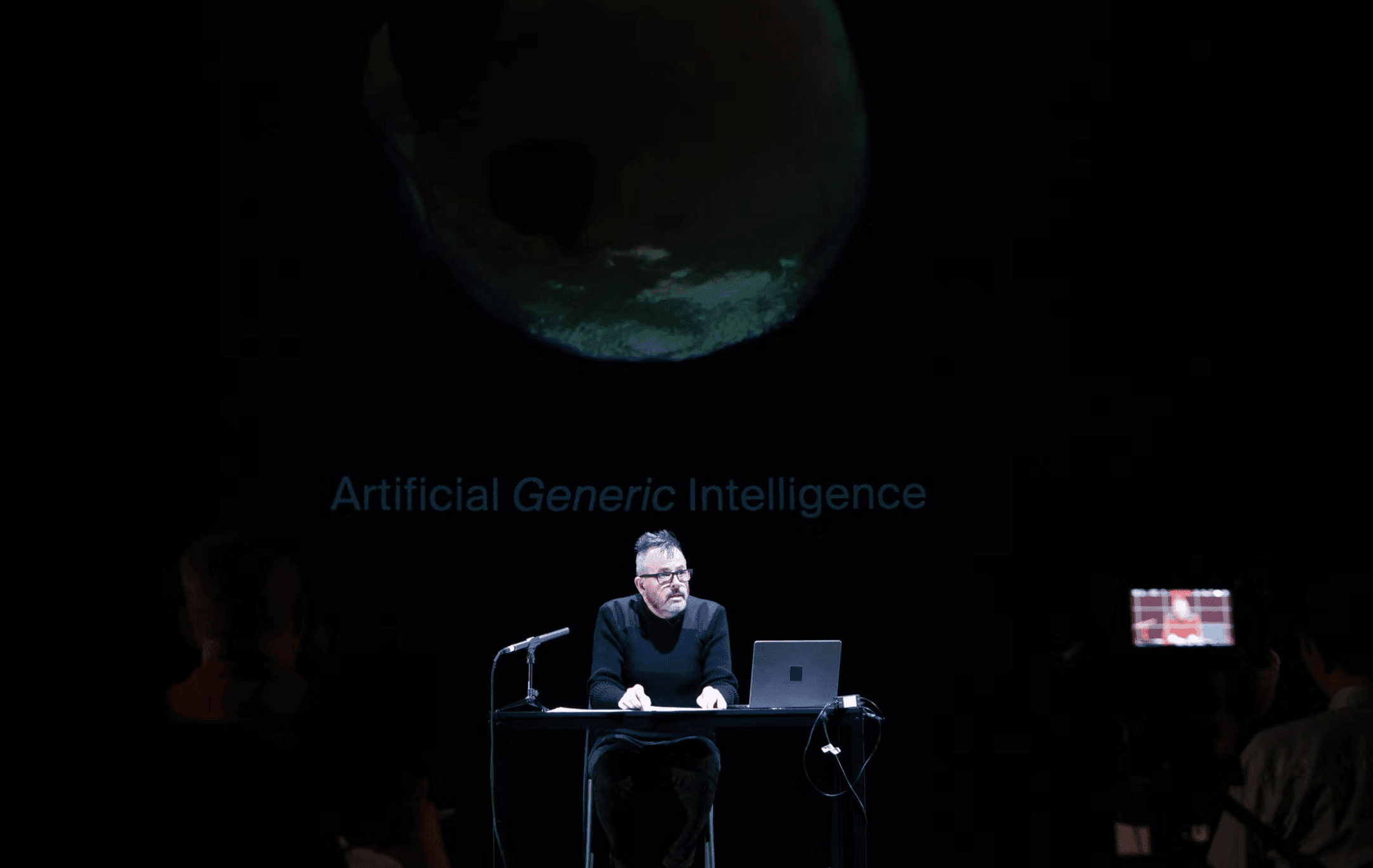

Benjamin H. Bratton

A public lecture at Central Saint Martins, University of the Arts London, discussing the shift from AGI to artificial generic intelligence, the importance of recursive simulations, the decentering of personal data, the challenges of AI in science, intelligence as an evolutionary scaffold, the limitations of mainstream AI ethics, and why a planetary model of synthetic intelligence must drive its geopolitical project.

The Model is the Message

The Model is the Message explores the complex philosophical and practical issues posed by large language models as a core component of distributed AI systems. They are the heart of emerging cognitive infrastructures.

The essay, written just before the launch of ChatGPT, takes as its starting point the curious event of Google engineer Blake Lemoine being placed on leave after publicly releasing transcripts of conversations with LaMDA, a chatbot based on a large language model (LLM) that he claimed was conscious, sentient and a person. LaMDA may not be conscious in the ways that Lemoine believes it to be (his inference is clearly based in motivated anthropomorphic projection), but these kinds of artificial intelligence likely are "intelligent" in some specific and important ways. The real lesson for the philosophy of AI is that reality has outpaced the language available to parse what is already at hand. A more precise vocabulary is essential.

The Model is the Message identifies "seven problems with synthetic language at platform scale":

1.

Machine Majority Language Problem

When AI-powered things that speak human language outnumber actual humans

2.

Ouroboros Language Problem

When language models are so pervasive that subsequent models are trained on language data that was largely produced by other models’ previous outputs

3.

Apophenia Problem

When people interacting with AI perceive intentionality and ascribe causality to AI far beyond the technology's actual capabilities

4.

Artificial Epistemology Confidence Problem

When AI produces non-falsifiable interpretations of patterns in data that are so counterintuitive that they are all but impossible to confirm

5.

Fuzzy Bright Line Problem

The augmentation of human intelligence with AI will result in amalgamations that make it impossible to police boundaries between them

6.

Availability Bias Problem

The present sourcing of data to train models is a tiny human-centric fraction of all the forms of signaling in the world that could be included in planetary models

7.

Carbon Appetite Problem

The energy and carbon footprint of training the largest models is significant (though often overstated), which sharpens the question: to what purposes should large models most importantly be put?

The Model Is The Message

2022

Benjamin H. Bratton & Blaise Aguera Y Arcas

The debate over whether LaMDA is sentient or not overlooks important issues that will frame debates about intelligence, sentience, language and human-AI interaction in the coming years.

Further

Cognitive Infrastructures

Synthetic Intelligence

Design-Development Studio

June 21st – July 19th 2024

Cognitive Infrastructures is the theme for the 2024 Synthetic Intelligence Design-Development Studio in London. As AI becomes both more general and more foundational, it should not be seen as a disembodied virtual brain. It is a real, material force. AI is embedded in the active, decision-making processes of real-world systems. As AI becomes infrastructural, infrastructures become intelligent.

Individual users will not only interact with big models; multiple combinations of models will also interact with groups of people in overlapping configurations. Perhaps the most critical and unfamiliar interactions will unfold between different AIs without human interference. Cognitive Infrastructures are forming, framing, and evolving a new ecology of planetary intelligence.

What kind of design space is this? What does it afford, enable, produce, and delimit? When AIs are simultaneously platforms, applications and users, what are the interfaces between society and its intelligent simulations? How can we understand AI Alignment not just as AI bending to society, but as societies evolving in relationship to AI? What kinds of Cognitive Infrastructures might be revealed and composed?

2024 Design-Development Studio, London

Antikythera's Design-Development Studio, Cognitive Infrastructures, will run from June 21st to July 19th 2024.

The studio will select 12 to 18 interdisciplinary, full-time Studio Researchers (including engineers, designers, scientists, philosophers, writers and technologists) to develop speculative prototypes and propositions in response to briefs investigating the socialization of machine intelligences at planetary scale. The studio will be based at Central Saint Martins in King's Cross, with special events including lectures, gatherings, and salons unfolding across London and environs. The Design-Development Studio will combine collaborative software engineering driven by technical constraints with first-principles speculative and philosophical explorations of counterintuitive provocations and challenges in society-AI interaction.

Researchers will work with a network of Affiliate Researchers, including collaborators from Google Research/DeepMind, Cambridge Centre for the Future of Intelligence, Cambridge Centre for Existential Risk, and many others. Special seminars, lectures and workshops will be hosted by BENJAMIN BRATTON, Director of Antikythera; BLAISE AGUERA Y ARCAS, VP Technology and Society, Google Research/DeepMind; THOMAS MOYNIHAN, Historian; CHEN QIUFAN, Science-fiction author; SARA WALKER, Astrophysicist and co-developer of Assembly Theory; and others.

Core Principles: Design & Philosophy for Speculative Synthetic Intelligence

Rather than applying philosophy to ideas about technology, Antikythera derives and develops philosophy from direct encounters with technology. Rather than approach Artificial Intelligence as the imitation of the human, Synthetic Intelligence starts with the emerging potential of machine intelligences.

Antikythera approaches the issues of Synthetic Intelligence through several core principles:

● Computation is not just calculation, but the basis of a new global infrastructure of planetary computation remaking politics, economics, culture and science in its image.

● The ongoing emergence of AI represents a fundamental evolution of that global infrastructure, from stacks based on procedural programming architectures to ones based on training, serving and interacting with large models: from The Stack to the AI Stack.

● Machine intelligence is less a discrete artificial brain than the pervasive animation of distributed information sensing and processing infrastructures.

● "Antikythera" refers to computation as both an instrumental technology (one that allows us to do new things) and an existential technology (one that discloses and reveals underlying conditions).

● As existing technologies have outpaced legacy theory, philosophy is not something to be applied to or projected upon technology, but something to be generated from direct, exploratory encounters with technology.

What are Cognitive Infrastructures?

The studio's core hypothesis is that as artificial intelligence becomes infrastructural, and as societal infrastructures concurrently become more cognitive, the relation between AI theory and practice needs realignment. Across scales, from world-datafication and data visualization to users and UI and back again, many of the most interesting problems in AI design are still embryonic.

Natural intelligence emerges at environmental scale and in the interactions of multiple agents. It is located not only in brains but in active landscapes. Similarly, artificial intelligence is not contained within single artificial minds but extends throughout the networks of planetary computation: it is baked into industrial processes; it generates images and text; it coordinates circulation in cities; it senses, models and acts in the wild.

This represents an infrastructuralization of AI, but also a 'making cognitive' of both new and legacy infrastructures. These infrastructures are capable of responding to us, to the world and to each other in ways we recognize as embedded and networked cognition.

AI is physicalized, from user interfaces on the surface of handheld devices to systems deep below the built environment. As we interact with the world, we retrain model weights, which makes action newly reflexive: performing an action is also a way of representing it within a model. To play with the model is to remake the model, increasingly in real time.

How might this frame human-AI interaction design? What happens when data is produced and curated for increasingly generalized, multimodal and foundational models? How might the collective intelligence of generative AI make the world not only queryable, but re-composable in new ways? How will simulations collapse the distances between the virtual and the real? How will human societies align toward the insights and affordances of artificial intelligence, rather than AI bending to human constructs? Ultimately, how will the inclusion of a fuller range of planetary information, beyond traces of individual human users, expand what counts as intelligence?

Studio Development Briefs

Antikythera’s Cognitive Infrastructures studio will unfold from several interrelated speculative briefs for intellectual and practical exploration:

●

CIVILIZATIONAL OVERHANG AND PRODUCTIVE DISALIGNMENT

AI overhang affects not only narrow domains but also, arguably, civilizations, and how they understand and register their own organization: past, present, and future. As a macroscopic goal, simple "alignment" of AI to existing human values is inadequate and even dangerous. The history of technology suggests that the positive impacts of AI will not arise through its subordination to, or mimicry of, human desires. Productive disalignment (bending society toward the fundamental insights of AI) is just as essential.

●

HAIID: HUMAN-AI INTERACTION DESIGN

HAIID is an emerging field, one that contemplates the evolution of Human-Computer Interaction in a world where AI can process complex psychosocial phenomena. Anthropomorphization of AI often leads to weird "folk ontologies" of what AI is and what it wants. Drawing on perspectives from a global span of cultures, mapping the odd and outlier cases of HAIID gives designers a wider view of possible interaction models.

●

TOY WORLD POLICIES

Toy Worlds allow AIs to navigate and manipulate low-dimensional virtual spaces as analogues for higher-dimensional real-world spaces, each space standing in for the other in sequence. In doing so, an AI's learning consists in adjusting the policies that focus and adapt its learned expertise. The sim-to-real gap can be rethought in two ways: as recovering what is lost between low and high dimensions, and as the agnostic transfer of policy from one domain to another.

●

EMBEDDINGS VISUALIZATION

The predictive intelligence of LLMs is based on the adjacencies of word embeddings in mind-bendingly complex vector spaces. Different ways of visualizing embeddings are different ways of comprehending machine intelligence. Descriptive and generative models for this can be drawn from neural network and brain visualization, complex systems modeling, agent interaction mapping, semantic trees, and more.
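The notion of "adjacency" in an embedding space can be made concrete with a minimal sketch. It assumes hypothetical four-dimensional toy vectors (real LLM embeddings are learned and have hundreds or thousands of dimensions) and uses cosine similarity, one common measure of closeness between vectors. Any visualization of embeddings is, in effect, an attempt to keep relations like these legible when projecting them down to two or three dimensions.

```python
import math

# Hypothetical toy embeddings, invented for illustration only.
EMBEDDINGS: dict[str, list[float]] = {
    "river": [0.9, 0.1, 0.0, 0.3],
    "stream": [0.85, 0.15, 0.05, 0.25],
    "bank": [0.5, 0.5, 0.2, 0.1],
    "money": [0.1, 0.9, 0.3, 0.0],
}


def cosine(a: list[float], b: list[float]) -> float:
    """Cosine similarity: 1.0 for same direction, 0.0 for orthogonal vectors."""
    dot = sum(x * y for x, y in zip(a, b))
    norm_a = math.sqrt(sum(x * x for x in a))
    norm_b = math.sqrt(sum(y * y for y in b))
    return dot / (norm_a * norm_b)


def nearest(word: str, k: int = 2) -> list[tuple[str, float]]:
    """Return the k words whose embeddings are most adjacent to `word`."""
    query = EMBEDDINGS[word]
    scored = [(other, cosine(query, vec)) for other, vec in EMBEDDINGS.items() if other != word]
    return sorted(scored, key=lambda pair: pair[1], reverse=True)[:k]


for w in EMBEDDINGS:
    print(w, [(other, round(score, 3)) for other, score in nearest(w)])
```

With these made-up vectors, "river" and "stream" are close neighbors, while "bank" sits between the river-like words and "money": the kind of intermediate position that embedding visualizations try to make visible.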

●

GENERATIVE AI AND MASSIVELY-DISTRIBUTED PROMPTING

For generative AI, the distances between training data and prompt engineering can seem, at different times, to be vast or tiny. To train or to prompt are both forms of interaction with large models. As the collective intelligence of culture is transformed into weights, weights are activated by prompts shared across domains to produce new artifacts. Though interface culture tends to individuate interactions with models, there are many ways to design massively-distributed prompting, producing collective artifacts that mirror the societal-scale intelligence of training data.

●

MULTIMODAL LLM INTERFACES

The design space of interaction with multimodal LLMs is not limited to individual or group chat interfaces, but can include many kinds of media input, combined to produce a similarly wide range of hybrid outputs. Redefining "language" as that which can be tokenized not only breaks down distinctions of genre and media but also multiplies and integrates forms of sensing (sight, sound, speech, text, movement, etc.). New interfaces may allow users both to comprehend and to compose those hybrids.
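The idea of "language" as whatever can be tokenized can be sketched in a few lines. This is a minimal illustration under hypothetical assumptions, not how any particular multimodal LLM works: text is tokenized as raw UTF-8 bytes (IDs 0 to 255), and a sensor signal normalized to [-1, 1] is quantized into 16 bins assigned IDs just after the text range. Once both media share one integer vocabulary, they can be interleaved in a single sequence that one model could, in principle, read or generate.

```python
TEXT_VOCAB_SIZE = 256  # one token ID per possible UTF-8 byte value
SIGNAL_BINS = 16       # hypothetical number of quantization levels for a sensor signal


def tokenize_text(text: str) -> list[int]:
    """Byte-level tokenization: each UTF-8 byte becomes a token ID in [0, 255]."""
    return list(text.encode("utf-8"))


def tokenize_signal(samples: list[float], lo: float = -1.0, hi: float = 1.0) -> list[int]:
    """Uniformly quantize samples into SIGNAL_BINS bins, offset past the text IDs."""
    tokens = []
    for x in samples:
        x = min(max(x, lo), hi)  # clip out-of-range readings
        bin_index = int((x - lo) / (hi - lo) * SIGNAL_BINS)
        bin_index = min(bin_index, SIGNAL_BINS - 1)  # x == hi falls in the top bin
        tokens.append(TEXT_VOCAB_SIZE + bin_index)
    return tokens


# One interleaved sequence mixing a caption with a short, made-up sensor trace.
sequence = tokenize_text("wind: ") + tokenize_signal([-0.9, -0.2, 0.4, 1.0])
print(sequence)
```

Real systems often rely on learned tokenizers or codecs rather than fixed bins; the point here is only that a shared vocabulary is what lets different forms of sensing be composed in one sequence.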

●

DATA PROVENANCE AND PROVIDENCE: THE GOOD, THE POISONED, AND THE COLLAPSED

The future utility of LLMs as cognitive infrastructures may be undermined by model collapse caused by retraining on model outputs, and by model degradation caused by training on poisoned data. Like the Ouroboros, the model eats its own tail. Meanwhile, differentiating between human-generated and model-generated data and artifacts will become more difficult and complex. High-quality domain-specific data is largely private and/or privatized, and so not generally available for socially useful models. Will synthetic data and techniques like federated learning become more essential in ensuring data quality, and if so, what are the necessary systems?
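The "model eats its own tail" dynamic can be illustrated with a deliberately crude toy, a minimal sketch rather than a model of any real LLM training pipeline. It assumes each "model" is just a Gaussian fitted by maximum likelihood, and that every generation is trained only on a small sample generated by the previous generation. Because each refit is estimated from finite samples of the previous fit, estimation error compounds and the fitted spread tends to shrink across generations, a simplified analogue of the loss of distributional tails associated with model collapse. The function name and its parameters are illustrative assumptions.

```python
import random
import statistics


def simulate_collapse(generations: int = 200, samples_per_gen: int = 10, seed: int = 0) -> list[float]:
    """Toy recursive-training loop: each generation fits a Gaussian to samples
    drawn from the previous generation's fit. Returns the fitted std per generation."""
    rng = random.Random(seed)
    mu, sigma = 0.0, 1.0  # generation 0 stands in for the original "human" data distribution
    history = [sigma]
    for _ in range(generations):
        # Generate synthetic training data from the current model only.
        data = [rng.gauss(mu, sigma) for _ in range(samples_per_gen)]
        # Retrain: maximum-likelihood estimates of mean and (population) std.
        mu = statistics.fmean(data)
        sigma = statistics.pstdev(data, mu)
        history.append(sigma)
    return history


stds = simulate_collapse()
print(f"generation 0 std = {stds[0]:.3f}, generation {len(stds) - 1} std = {stds[-1]:.3g}")
```

Mixing a share of fresh, original data back into each generation is one commonly discussed way to slow this drift; in the toy above, that would mean sampling part of `data` from the generation-0 distribution instead of the current fit.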

●

THE PLANETARY ACROSS HUMAN AND INHUMAN LANGUAGES

The planetary computation we have today is not planetary enough. The tokenization of collective intelligence is limited by the fact that the most important LLMs are trained largely on English, and on English produced by a relatively small slice of humans, who are themselves a small slice of the information-producing and information-consuming forms of life. Given the role of planetary AI in ecological sensing, monitoring and governance (not to mention fundamental science), the prospect of "organizing all the world's information" takes on renewed urgency and complexity.

Applications

The interdisciplinary studio will combine philosophy, design and code, so applications are encouraged from applicants with diverse expertise. Fields can include computer science, AI, philosophy, interaction design, architecture, systems design, economics, linguistics, history of science and technology, digital media, filmmaking and more.

Those interested in applying to the Cognitive Infrastructures Design-Development Studio in London should submit a CV, a PDF of Work Samples, a 1-minute candid Video Introduction, and an Application Form.

Applications are open until March 1st 2024. Selected applicants will be interviewed and notified of their participation in the studio by March 18th. The studio will be held full-time and in person at Central Saint Martins, University of the Arts London. The studio will begin with an orientation on the evening of Friday June 21st, and then run full-time from June 24th to July 19th 2024. Studio Researchers will receive a stipend of 4000 GBP for the duration of the 4-week studio, and should ensure they have the right to work in the UK. For any additional or related inquiries, contact us.

Work produced in Antikythera's Design-Research and Design-Development Studios is neither proprietary to nor the exclusive property of Antikythera. As a non-profit think tank, its goal is the active dissemination of ideas so that they may realize their fullest influence. Studio participants are invited to further develop ideas and projects incubated within the Studio, and Antikythera may likewise continue projects and prototypes with or independently of the original collaborators. All IP is shared and open for further development.

Application Timeline

Application Requirements

① 1-2 page CV

② 10-page PDF of Work Samples

③ 1-minute Introduction Video

④ Application Form

The Studio will be held in-person in London. Participants receive a stipend and must have the right to work in the UK.